In this post, we build on the previous one: we save the daily LeetCode results to Azure Blob Storage using a second Azure Function, and then trigger both functions from Azure Data Factory.

To follow along with the post, you can check the code here

Project Overview

We’ll cover the following:

1 - Creating a second Azure Function to write the LeetCode summary to Azure Blob

2 - Setting up Azure Data Factory to trigger both functions daily

Azure Function to store LeetCode Data into Blob

Project Structure

The new function app has a very similar structure to our previous one:

    .
    ├── README.md
    ├── host.json
    ├── requirements.txt
    ├── function_app.py
    ├── helpers
    │   ├── azure_kv.py
    │   ├── azure_blob.py
    │   └── leetcode.py
    └── settings.py
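The helpers below import `app_config` from `settings.py`, which the post does not show. As a rough sketch only, it could look like the following. The environment variable names, the `load_config` helper, and the default GraphQL URL are assumptions for illustration; the real project may load these values from Key Vault through `helpers/azure_kv.py` instead.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class AppConfig:
    # Attribute names mirror the ones the helpers in this post read from app_config.
    AZURE_BLOB_CONNECTION_STRING: str
    LC_GRAPHQL_URL: str


def load_config(env: Mapping[str, str] = os.environ) -> AppConfig:
    """Build the config from environment variables (variable names are assumed)."""
    return AppConfig(
        AZURE_BLOB_CONNECTION_STRING=env.get("AZURE_BLOB_CONNECTION_STRING", ""),
        # Assumed default; override it through the environment if needed.
        LC_GRAPHQL_URL=env.get("LC_GRAPHQL_URL", "https://leetcode.com/graphql"),
    )


app_config = load_config()
```

Passing the environment in as a mapping keeps the loader easy to exercise locally without touching real application settings.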

Writing to Azure Blob

Let’s have a look at the Python code that implements the logic.

Inside helpers/azure_blob.py, we define a helper class to read and write JSON data into an Azure Blob container:

    import json
    import logging

    from azure.storage.blob import BlobServiceClient

    from settings import app_config

    logger = logging.getLogger("azure")
    logger.setLevel(logging.INFO)


    class AzBlobStorage:
        """Read and write JSON blobs in a single container."""

        def __init__(self, container_name: str) -> None:
            # Authenticate with the storage account's connection string.
            self.blob_service_client = BlobServiceClient.from_connection_string(
                app_config.AZURE_BLOB_CONNECTION_STRING
            )
            self.container_client = self.blob_service_client.get_container_client(container_name)

        def read_json(self, blob_name: str) -> dict:
            """Download a blob and parse its content as JSON."""
            try:
                blob_client = self.container_client.get_blob_client(blob_name)
                blob_data = blob_client.download_blob().readall()
                return json.loads(blob_data)
            except Exception as e:
                logger.error(f"Error reading blob {blob_name}: {e}")
                raise

        def write_json(self, blob_name: str, data: dict) -> None:
            """Serialize data to JSON and upload it, replacing any existing blob."""
            try:
                blob_client = self.container_client.get_blob_client(blob_name)
                blob_data = json.dumps(data)
                blob_client.upload_blob(blob_data, overwrite=True)
                logger.info(f"Successfully wrote to blob {blob_name}")
            except Exception as e:
                logger.error(f"Error writing to blob {blob_name}: {e}")
                raise
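To check the read/write logic without a storage account, one option is a small in-memory stand-in that offers the same three calls the helper relies on (`get_blob_client`, `upload_blob`, `download_blob().readall()`). The sketch below is only that: `FakeBlobClient` and `FakeContainerClient` are hypothetical test doubles, not Azure classes, and the two module-level functions simply mirror the bodies of `write_json` and `read_json`.

```python
import json


class FakeBlobClient:
    """In-memory stand-in for a blob client (a test double, not an Azure class)."""

    def __init__(self, store: dict, name: str) -> None:
        self._store = store
        self._name = name

    def upload_blob(self, data, overwrite: bool = False) -> None:
        if self._name in self._store and not overwrite:
            # The real SDK raises its own error type; this is a simplification.
            raise FileExistsError(self._name)
        self._store[self._name] = data.encode() if isinstance(data, str) else data

    def download_blob(self) -> "FakeBlobClient":
        return self  # lets callers chain .readall(), as the helper does

    def readall(self) -> bytes:
        return self._store[self._name]


class FakeContainerClient:
    """Keeps blobs in a dict keyed by blob name."""

    def __init__(self) -> None:
        self.store: dict = {}

    def get_blob_client(self, blob_name: str) -> FakeBlobClient:
        return FakeBlobClient(self.store, blob_name)


def write_json(container, blob_name: str, data: dict) -> None:
    container.get_blob_client(blob_name).upload_blob(json.dumps(data), overwrite=True)


def read_json(container, blob_name: str) -> dict:
    return json.loads(container.get_blob_client(blob_name).download_blob().readall())


container = FakeContainerClient()
write_json(container, "lc-data-20240105.json", {"solved": 3})
write_json(container, "lc-data-20240105.json", {"solved": 4})  # second write replaces the first
print(read_json(container, "lc-data-20240105.json"))
```

Because the upload uses `overwrite=True`, running the function twice on the same day keeps only the latest snapshot for that date.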

In helpers/leetcode.py, similar to last time, we add a function to fetch the most recent accepted LeetCode submissions:

    import requests

    from settings import app_config


    def fetch_recent_submissions(username: str, limit: int = 30) -> dict:
        """Return the raw GraphQL response with the user's recent accepted submissions."""
        query = """
        query recentAcSubmissions($username: String!, $limit: Int!) {
            recentAcSubmissionList(username: $username, limit: $limit) {
                id
                title
                titleSlug
                timestamp
            }
        }
        """
        response = requests.post(
            app_config.LC_GRAPHQL_URL,
            json={"query": query, "variables": {"username": username, "limit": limit}},
            headers={"Content-Type": "application/json"},
            timeout=10,  # requests has no default timeout; avoid hanging the function
        )
        if response.status_code == 200:
            return response.json()
        raise RuntimeError(f"Query failed: {response.status_code} - {response.text}")
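The function returns the raw GraphQL response, which is what gets stored in the blob. If you later want to analyze the data, a small parser along these lines may help. It is a sketch under assumptions: the response is taken to be nested under `data.recentAcSubmissionList` (matching the query above), `timestamp` is assumed to hold epoch seconds, and the sample payload is invented for illustration rather than real API output.

```python
from datetime import datetime, timezone


def parse_submissions(payload: dict) -> list[dict]:
    """Flatten the GraphQL response into a list of submissions with UTC datetimes."""
    items = (payload.get("data") or {}).get("recentAcSubmissionList") or []
    return [
        {
            "id": item["id"],
            "title": item["title"],
            "slug": item["titleSlug"],
            # int() accepts both "1704412800" and 1704412800.
            "solved_at": datetime.fromtimestamp(int(item["timestamp"]), tz=timezone.utc),
        }
        for item in items
    ]


# Illustrative payload only; the values are made up.
sample = {
    "data": {
        "recentAcSubmissionList": [
            {"id": "1", "title": "Two Sum", "titleSlug": "two-sum", "timestamp": "1704412800"}
        ]
    }
}

for submission in parse_submissions(sample):
    print(submission["title"], submission["solved_at"].isoformat())
```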

Writing Data with Azure Function

Finally, in function_app.py, we define our HTTP-triggered function:

    import logging
    from datetime import datetime, timezone

    import azure.functions as func

    from helpers.azure_blob import AzBlobStorage
    from helpers.leetcode import fetch_recent_submissions

    # Created once per worker and reused across invocations.
    blob_storage = AzBlobStorage("data")

    app = func.FunctionApp()


    @app.route(route="leetcode-write-data", methods=["GET"])
    def leetcode_write_data(req: func.HttpRequest) -> func.HttpResponse:
        data = fetch_recent_submissions("midlcengineer")
        # One blob per day, named after the current UTC date, e.g. lc-data-20240105.json.
        blob_name = f"lc-data-{datetime.now(timezone.utc).strftime('%Y%m%d')}.json"
        blob_storage.write_json(blob_name, data)
        logging.info("Python HTTP trigger function executed.")
        return func.HttpResponse("Leetcode summary executed successfully.", status_code=200)
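The blob name is derived from the current date, so it is worth pinning that date to UTC if the pipeline is scheduled in UTC. A minimal sketch of a helper that does this is shown below; `daily_blob_name` and its parameters are hypothetical names, not part of the original project.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def daily_blob_name(now: Optional[datetime] = None, prefix: str = "lc-data") -> str:
    """Return '<prefix>-YYYYMMDD.json' for the UTC date of `now`.

    `now` should be timezone-aware; when omitted, the current UTC time is used.
    """
    now = now or datetime.now(timezone.utc)
    return f"{prefix}-{now.astimezone(timezone.utc).strftime('%Y%m%d')}.json"


# 01:00 on 6 January at UTC+2 is still 5 January in UTC.
local_time = datetime(2024, 1, 6, 1, 0, tzinfo=timezone(timedelta(hours=2)))
print(daily_blob_name(local_time))
```

Keeping the naming in one function also makes it easy to read a given day's blob back later with the same rule.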

Setting Up Azure Data Factory

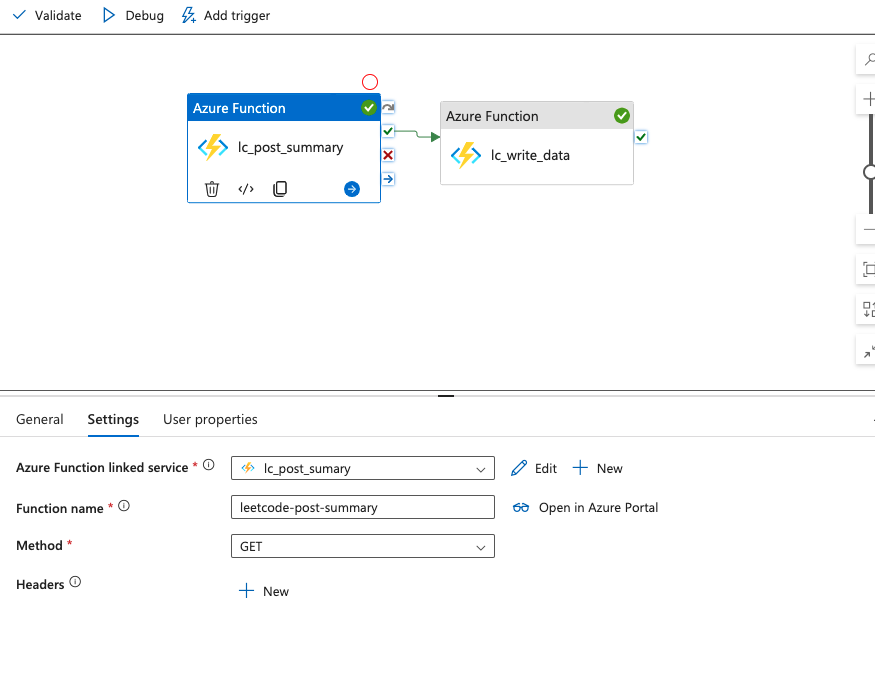

Let’s now trigger both our Azure Functions using a simple ADF pipeline.

Start by authoring a new pipeline in ADF and link the Azure Function services.

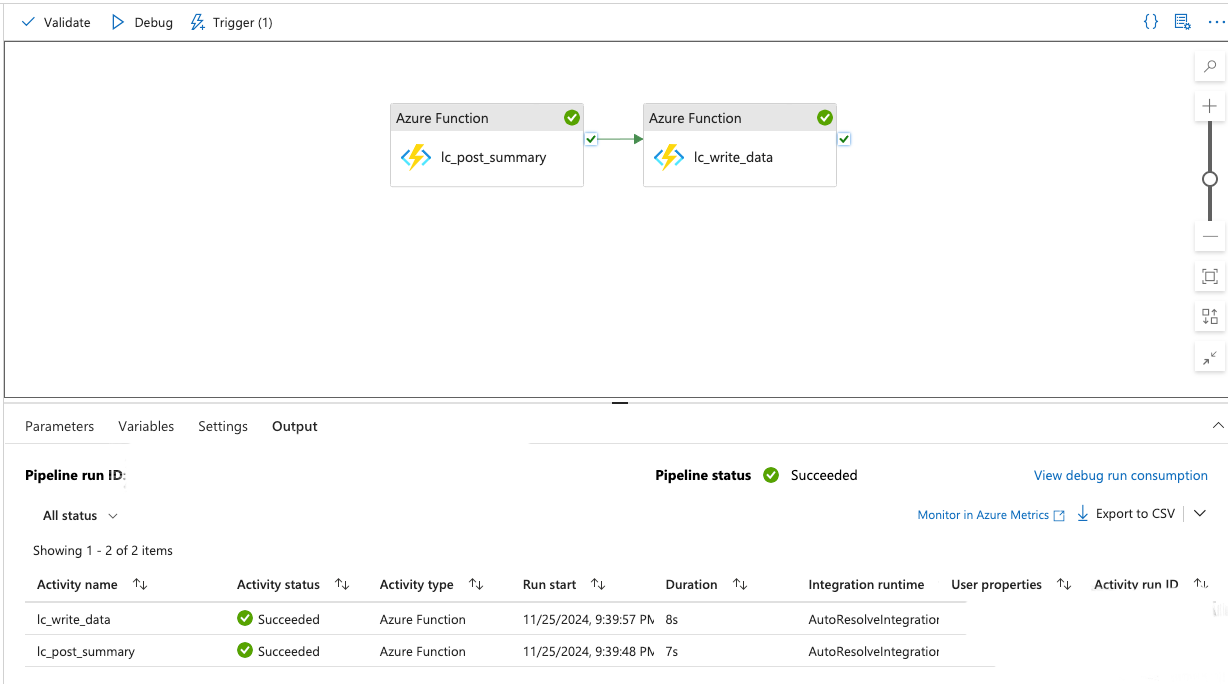

In this pipeline:

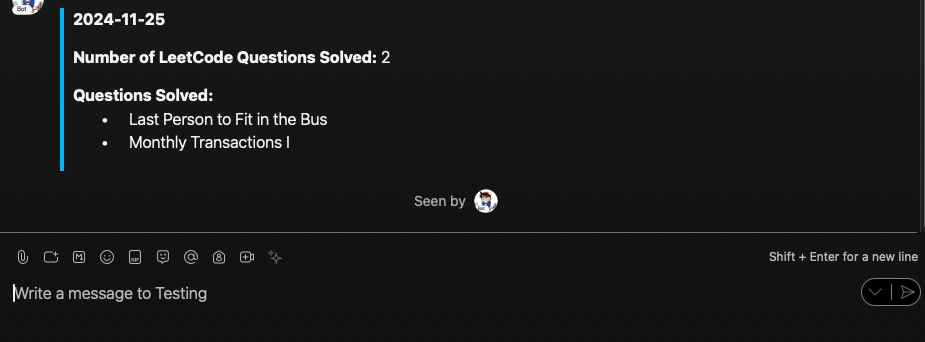

1 - The first activity calls the leetcode-post-summary function (sends a message to Webex)

2 - The second activity calls the leetcode-write-data function (writes to blob)

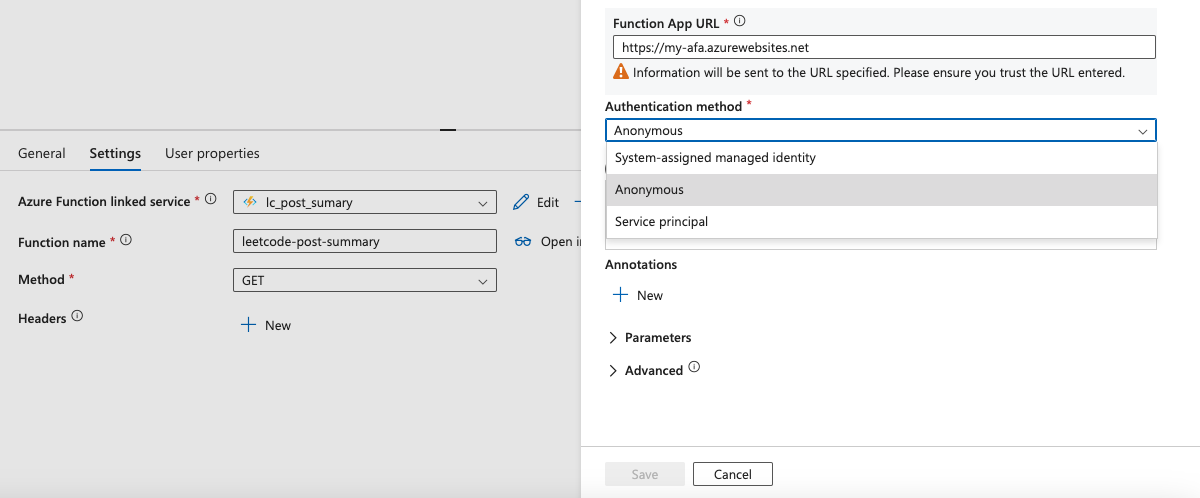

Make sure to configure the Function URL, method, and authentication (we use Anonymous here).
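For reference, the two-activity pipeline described above corresponds roughly to a JSON definition like the one this sketch prints. Treat it as an approximation: the pipeline name, activity names, and linked service names are hypothetical, both calls are assumed to use GET, and the dependency between the activities is an assumption based on their order. The JSON shown in the ADF authoring UI for your own pipeline is the authoritative version.

```python
import json
from typing import Optional


def azure_function_activity(
    name: str, function_name: str, linked_service: str, depends_on: Optional[str] = None
) -> dict:
    """Build the dict for one Azure Function activity (names are illustrative)."""
    activity = {
        "name": name,
        "type": "AzureFunctionActivity",
        "linkedServiceName": {
            "referenceName": linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {"functionName": function_name, "method": "GET"},
        "dependsOn": [],
    }
    if depends_on:
        # Run only after the named activity has succeeded.
        activity["dependsOn"] = [
            {"activity": depends_on, "dependencyConditions": ["Succeeded"]}
        ]
    return activity


pipeline = {
    "name": "leetcode-daily",  # hypothetical pipeline name
    "properties": {
        "activities": [
            azure_function_activity(
                "PostSummary", "leetcode-post-summary", "SummaryFunctionApp"
            ),
            azure_function_activity(
                "WriteData", "leetcode-write-data", "WriteFunctionApp",
                depends_on="PostSummary",
            ),
        ]
    },
}

print(json.dumps(pipeline, indent=2))
```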

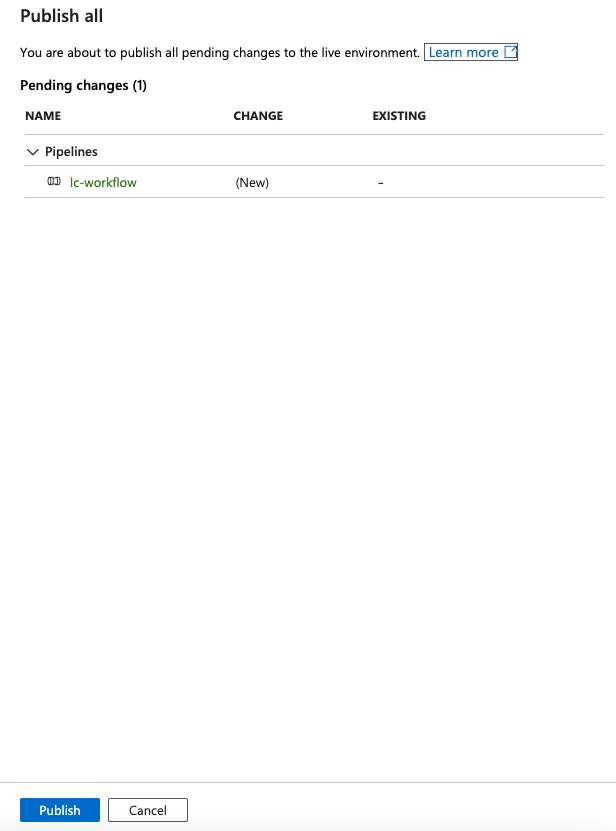

Publishing the Pipeline

Click Publish All to push your pipeline to the live environment.

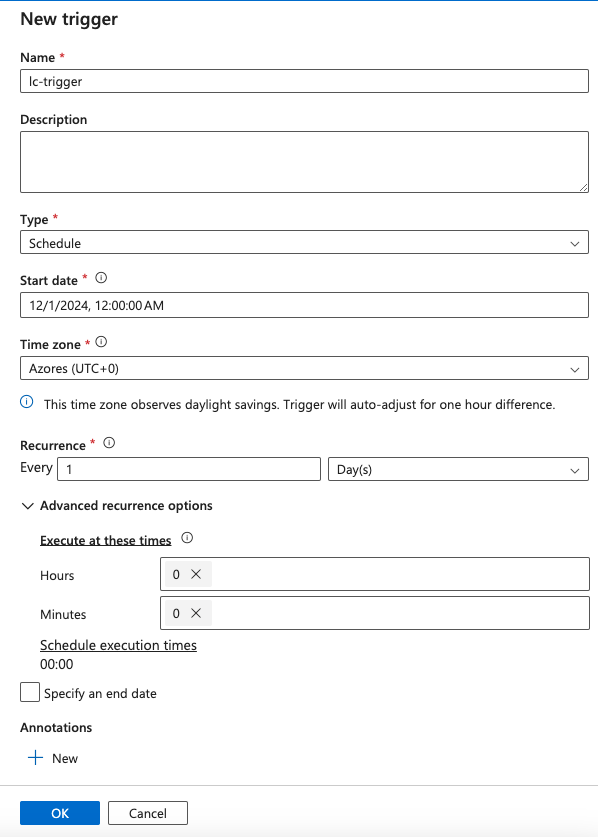

To automate daily execution, create a new schedule trigger for the pipeline.

This will execute both functions each day at midnight UTC, saving results and sending the summary to your Webex room.
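A daily midnight-UTC schedule like this one corresponds roughly to the trigger definition built below. This is a sketch under assumptions: the trigger name, pipeline name, and start date are placeholders, and the exact JSON that ADF generates for your trigger may contain additional fields.

```python
import json


def build_daily_trigger(pipeline_name: str, start_time: str) -> dict:
    """Build a schedule trigger dict that fires once a day at 00:00 UTC."""
    return {
        "name": "daily-midnight-utc",  # hypothetical trigger name
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,
                    "startTime": start_time,
                    "timeZone": "UTC",
                    "schedule": {"hours": [0], "minutes": [0]},
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    }
                }
            ],
        },
    }


# Placeholder pipeline name and start date.
trigger = build_daily_trigger("leetcode-daily", "2024-01-01T00:00:00Z")
print(json.dumps(trigger, indent=2))
```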

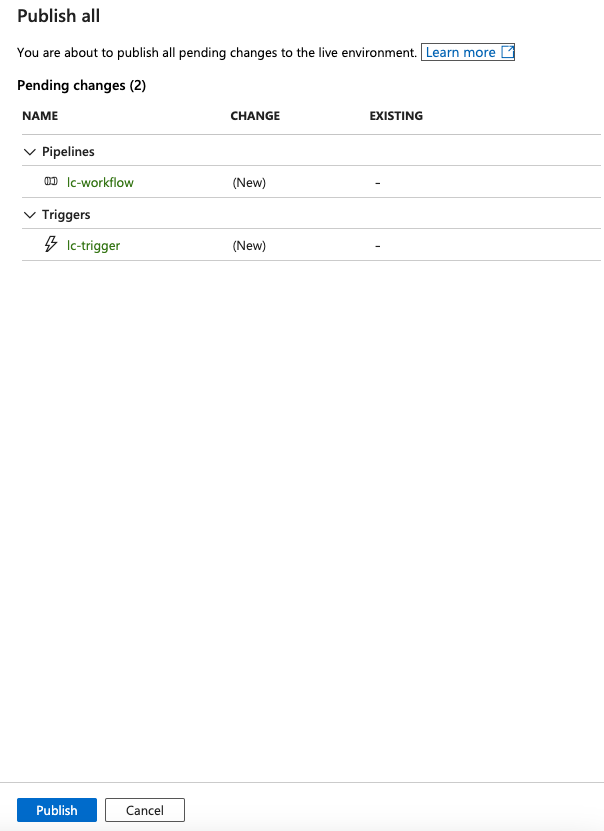

After configuring everything, you should see this final confirmation screen:

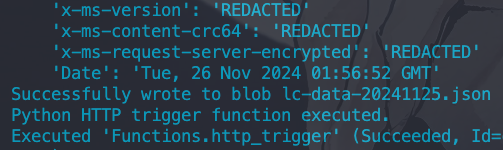

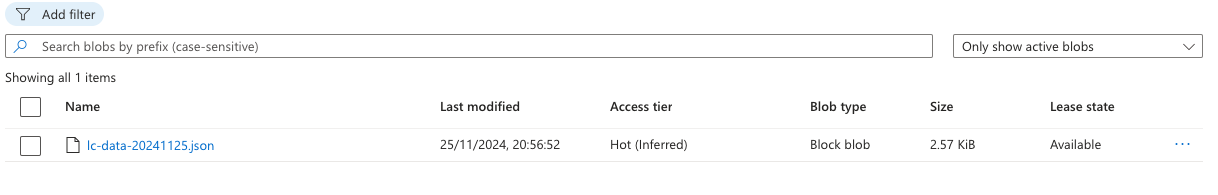

Blob Result Confirmation

After the pipeline runs, you can confirm in the data container that the blob for that day (named lc-data-YYYYMMDD.json) was successfully written.

And that’s it! You now have a fully automated system that:

1 - Sends your daily LeetCode progress to Webex

2 - Stores the raw data in Azure Blob for long-term analysis

3 - Is triggered on a daily basis via Azure Data Factory

Thanks for reading!