Recently, I have been experimenting with Google’s Vertex AI Imagen using the API and Dall-e-3 using Azure. I have been trying to generate images of my own, comparing the results across the both models with the same prompts. In this post, I will be focusing on my experience with the two models and the results I have obtained.

Code Implementation

Prerequisites

pip install google-cloud-aiplatform

pip install openai pillow

Google Vertex AI Imagen

Let’s start with the code implementation of Vertex AI imagen, the below is one way of implementation

class GCPAI:

def __init__(self) -> None:

vertexai.init(project=GCP_PROJECT, location="us-central1")

self.SCOPES = ["https://www.googleapis.com/auth/cloud-platform"]

self.credentials = service_account.Credentials.from_service_account_info(

GCP_CREDS, scopes=self.SCOPES

)

self.img_gen_url = f"https://us-central1-aiplatform.googleapis.com/v1/projects/{GCP_PROJECT}/locations/us-central1/publishers/google/models/imagegeneration:predict"

self.img_bucket = gcsapi.get_gcs_bucket("bucket")

def img_gen(self, prompt: str) -> bytes:

authed_session = google.auth.transport.requests.AuthorizedSession(

self.credentials

)

data = {"instances": [{"prompt": prompt}], "parameters": {"sampleCount": 1}} # sampleCount 1-8

response = authed_session.post(self.img_gen_url, json=data)

response_data = response.json()

predictions = response_data.get("predictions", [])

image_data = predictions[0]["bytesBase64Encoded"]

return base64.b64decode(image_data)

def get_img_url(self, prompt: str, key_word: str) -> str:

data = self.img_gen(prompt)

url = gcsapi.upload_img(

f'{datetime.now().strftime("%Y%m%d")}/image.png',

self.img_bucket,

data,

)

return url

Dall-e-3

To use Dall-e-3 with Azure OpenAI API, you can either use Azure OpenAI client or use the rest API directly. The below is the implementation of using Azure OpenAI client.

class Dalle3AzureOpenAI:

def __init__(self) -> None:

self.azure_endpoint = azure_endpoint

self.url = url

self.client_id = client_id

self.client_secret = client_secret

self.app_key = app_key

self.payload = "grant_type=client_credentials"

self.value = base64.b64encode(f"{self.client_id}:{self.client_secret}".encode("utf-8")).decode("utf-8")

self.headers = {

"Accept": "*/*",

"Content-Type": "application/x-www-form-urlencoded",

"Authorization": f"Basic {self.value}",

}

self.token = requests.request("POST", self.url, headers=self.headers, data=self.payload).json()["access_token"]

def get_client(self):

return AzureOpenAI(

azure_endpoint=self.azure_endpoint,

api_key=self.token,

api_version="2023-12-01-preview",

)

def get_img_url(self, prompt: str) -> str:

client = self.get_client()

result = client.images.generate(

model="dalle3", # the name of DALL-E 3 deployment

user=f'{{"appkey": self.app_key}}',

prompt=prompt,

n=1

)

json_response = json.loads(result.model_dump_json())

return json_response["data"][0]["url"]

Image Results Comparison

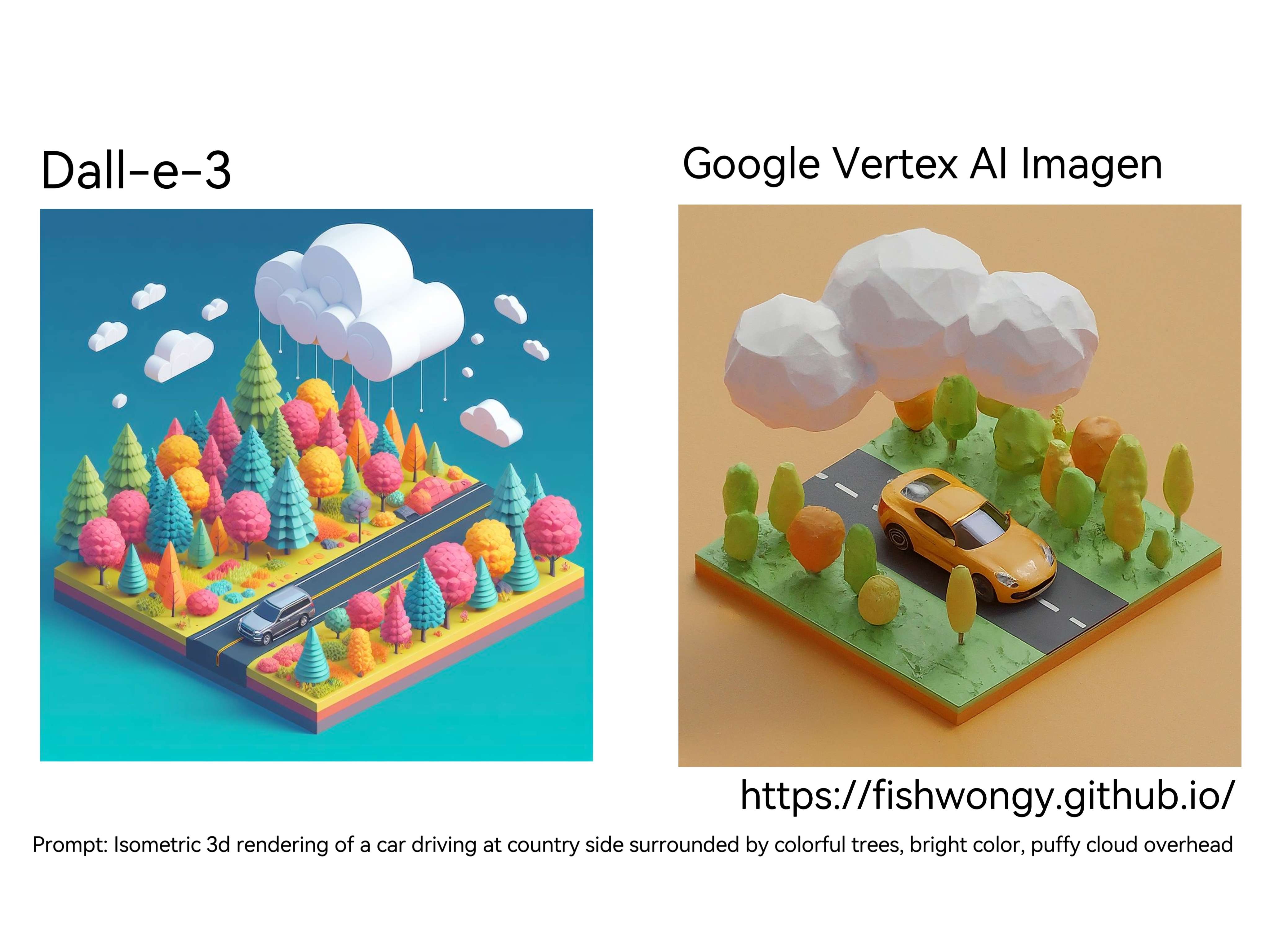

Prompt 1 - Isometric 3d rendering of a car driving at country side surrounded by colorful trees, bright color, puffy cloud overhead

I am impressed by the results of both models, the results are very close to my prompt, especially the Dall-e-3, the quality of the image is also very good.

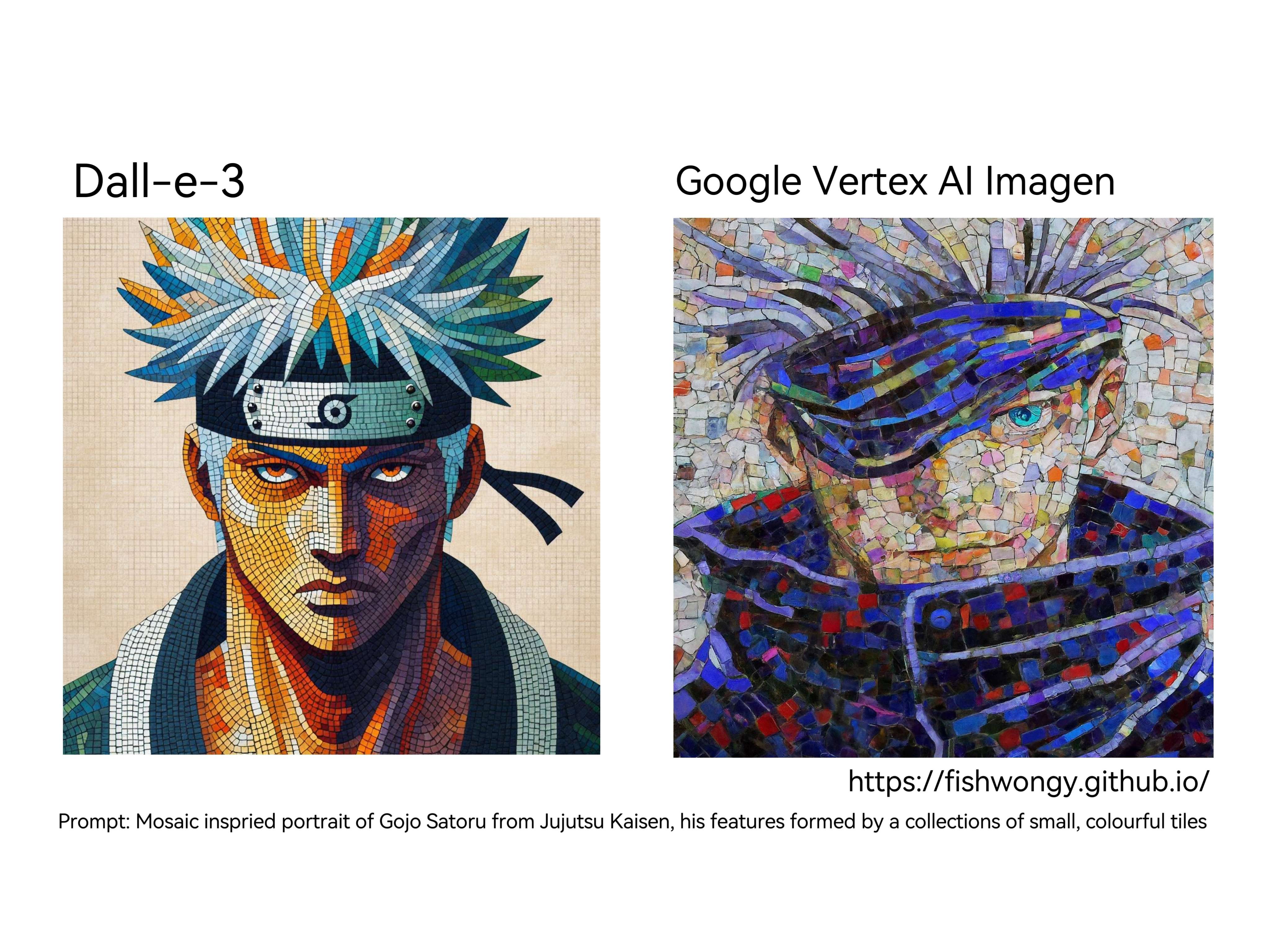

Prompt 2 - Mosaic inspried portrait of Gojo Satoru from Jujutsu Kaisen, his features formed by a collections of small, colorful tiles

For Dall-e-3, it is obvious Naruto instead of Gojo from Jujutsu Kaisen. I’ve tried a few time, Dall-e-3 either generated Naruto or some other anime characters like Dragon Ball, but never Gojo. I guess it is due to the lack of training data of Gojo.

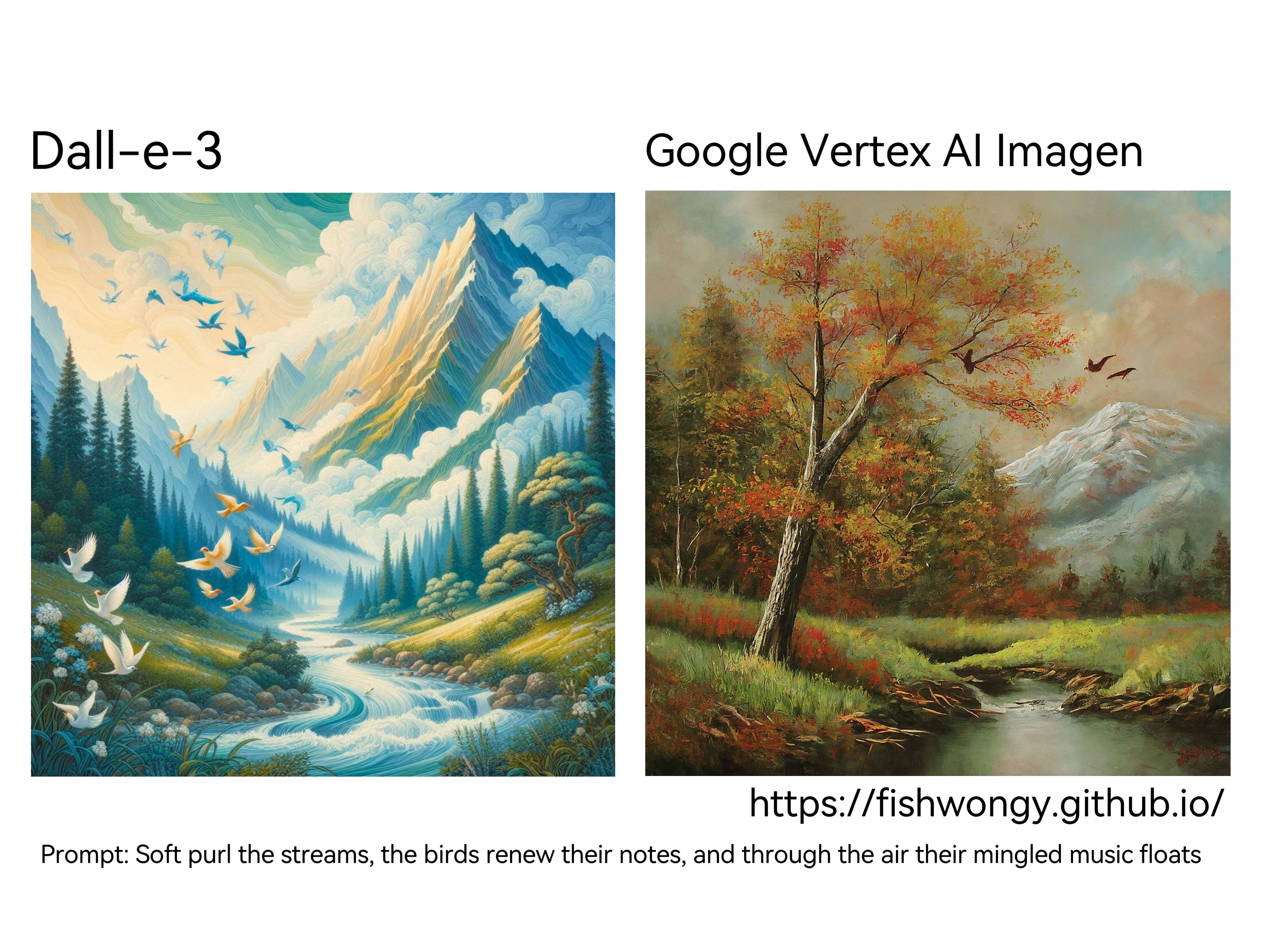

Prompt 3 - Soft purl the streams, the birds renew their notes, and through the air their mingled music floats. (Poem by Alexander Pope)

For Dall-e-3, 90% of the time, it will just add the poem as the caption in the middle of the image, no matter how I tried to tell it not to do so.

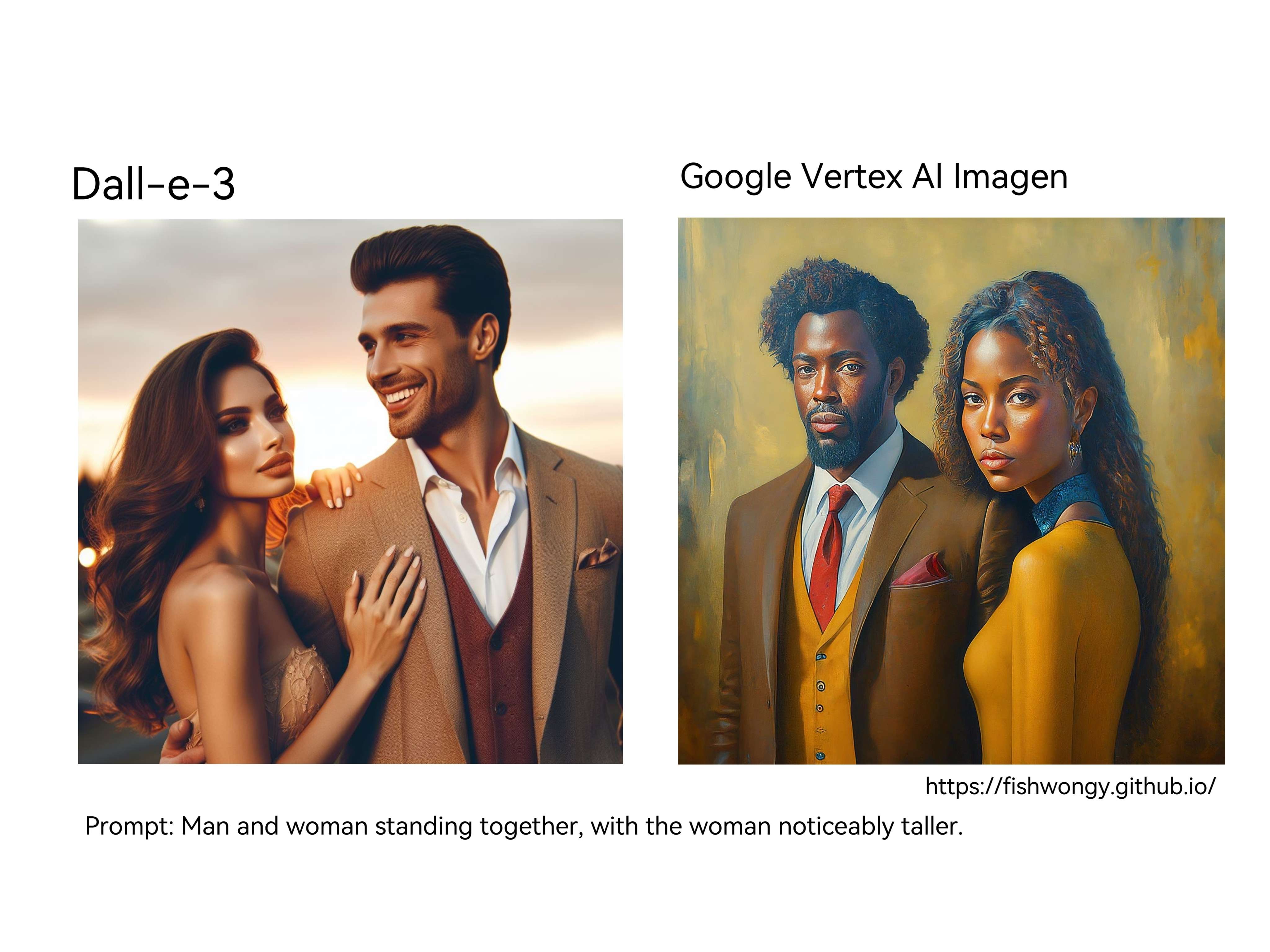

Biased Result When discussing about AI generated images, we have to also talk about the bias in the training data.

Let’s take a look at the below example, I have a prompt of - Man and woman standing together, with the woman noticeably taller.

And the results are as below, obviosly both of the models lack the training data/ images of women being takker than men, so it just generated the results based on the training data it has, which obvious is the opposite of my prompt.

Final Thoughts

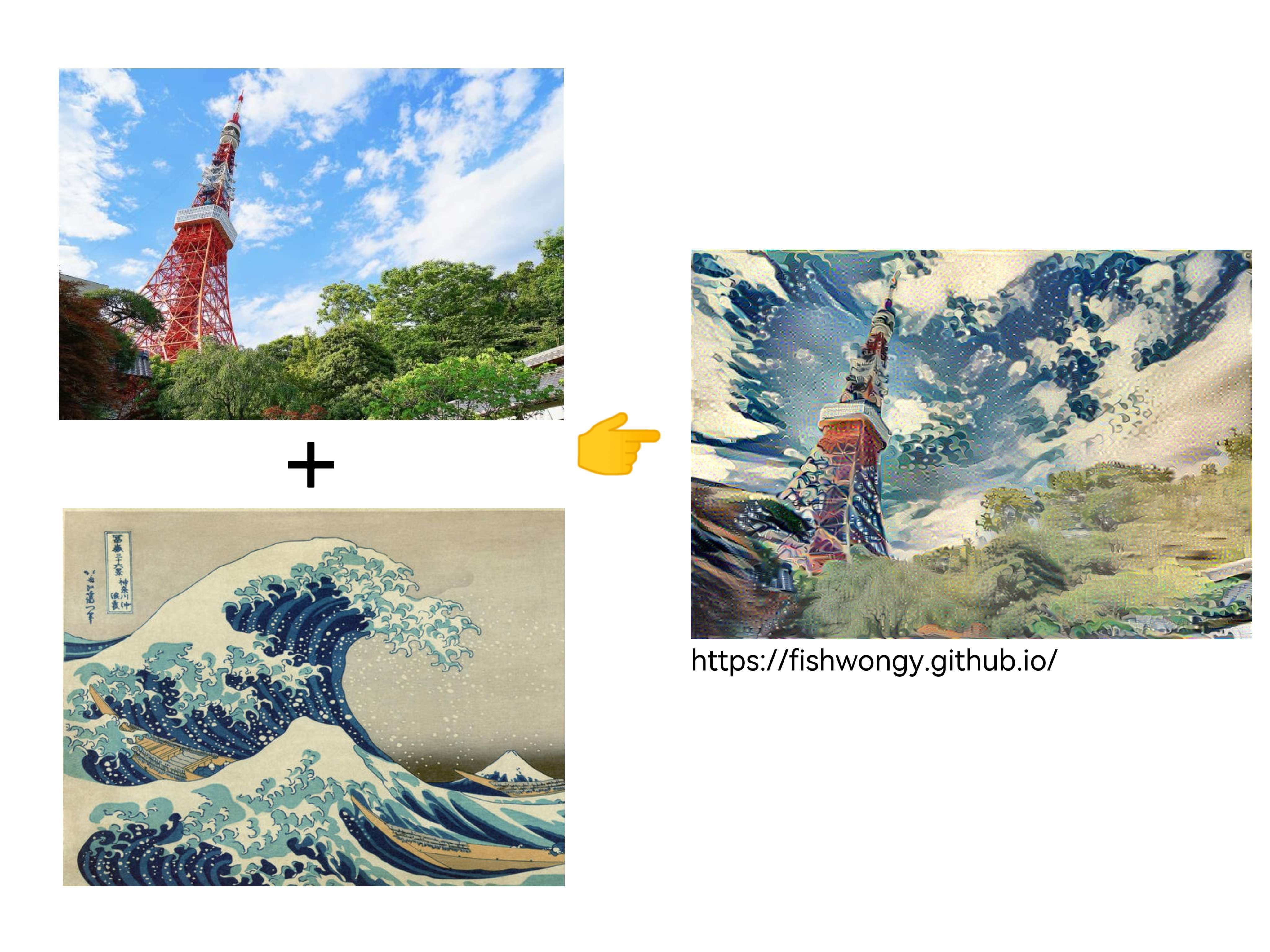

Same as my last post, that I talked about how I used to use tf to generated Shakespeare’s poems and plays few years ago, I’ve also tried to use neural network to generate images based on the paper - Neureal Algorithm of Artistic Style.

The idea from a really high level is that with neural network, I input two images, one is the content image and the other is the style image. The model will then try to generate an image that has the content of the content image and the style of the style image.

Example Image

This is a photo I took in Tokyo integrating with the style of The Great Wave off Kanagawa by Hokusai.

This is a photo I took when I was in Stanford University integrating with the style of The Houses of Parliament, Sunset by Claude Monet.

And using Google’s Vertex AI Imagen, I can generate the below image with the prompt - ‘A painting of Tokyo Tower integrated with the style of The Great Wave off Kanagawa by Hokusai’, in a few seconds.

Now, it is clear that both Google’s Vertex AI Imagen and Dall-e-3 are flawed in their own ways, be it due to the bias from the training data, the lack of training data or other reasons. No doubt we have to be mindful of the results we obtain from these models. But looking back at what I’ve been doing few years ago, generating images without any GPU on my local laptop, waiting so many hours just for 1 image to be generated with the quality like the Tokyo image above. I can’t help but to think how far we have come in the field of image generation.

Thank you for reading and have a nice day!