Google Cloud Functions is a highly scalable serverless FaaS product. I have previously used it for some data extraction and cleaning tasks at work. Recently, I decided to incorporate it into one of my personal projects.

The workflow looks something like below 👀

Cloud Function Setup

So, let’s look into the setup and begin to write some code.

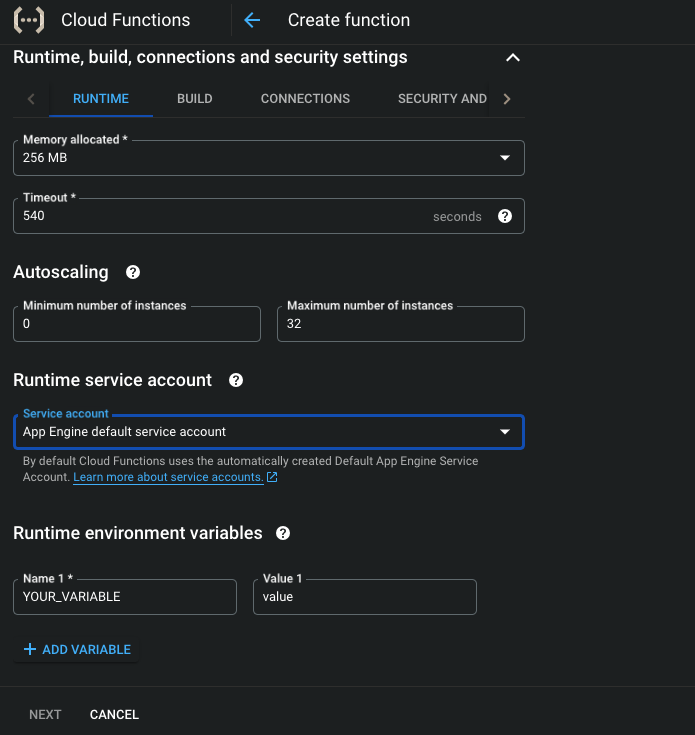

When setting up the cloud function, we need a runtime service account. Depending on development needs, we can also adjust the memory and timeout limits. Optionally, we can set environment variables and secrets.

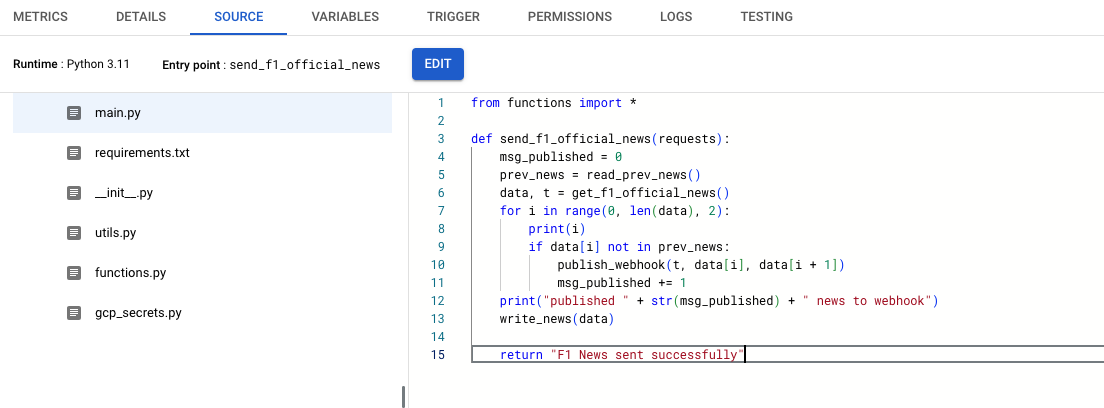

For the code structure, we can have something like this:

    ├── __init__.py
    ├── requirements.txt
    ├── utils.py
    ├── functions.py
    ├── gcp_secrets.py
    └── main.py

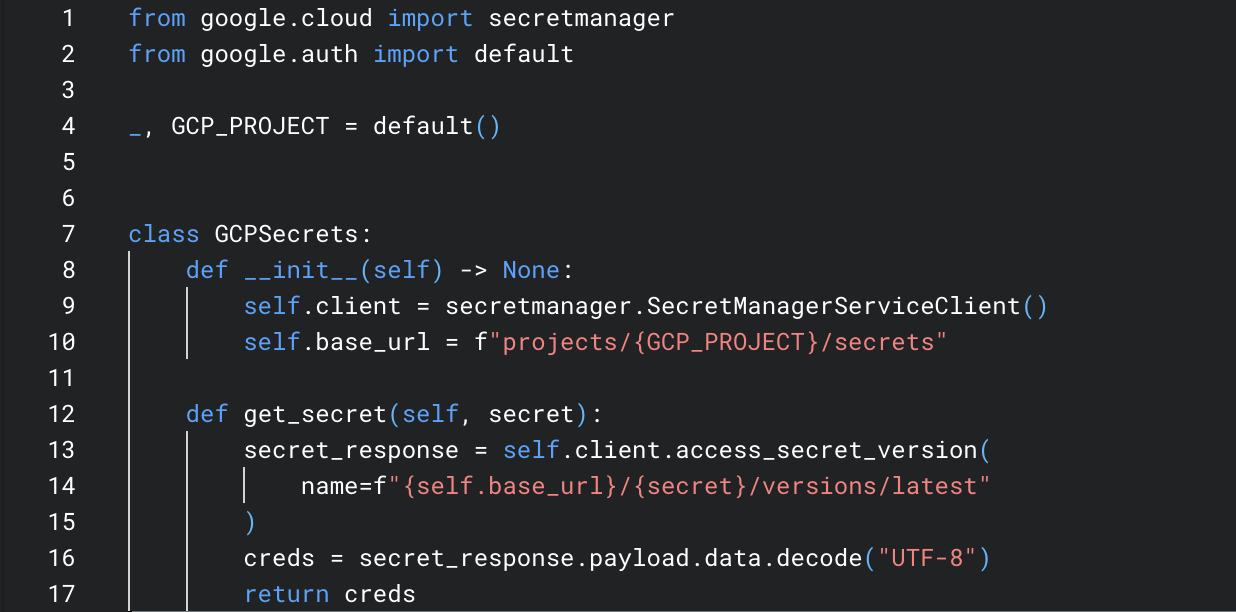

If we need to use any secrets or credentials in our code, we can store them in GCP’s Secret Manager and reference them using the google-cloud-secret-manager library.

When referencing the credentials, we can do something like this:

    from gcp_secrets import GCPSecrets

    secrets = GCPSecrets()
    VAR = secrets.get_secret("your-var")
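The contents of gcp_secrets.py are not shown in this post, so here is a minimal sketch of what such a helper could look like. The class body, the `GCP_PROJECT_ID` environment variable and the optional `client` argument are my assumptions for illustration; only the `GCPSecrets().get_secret(...)` call shape comes from the snippet above.

```python
import os


class GCPSecrets:
    """Hypothetical wrapper around Secret Manager (a sketch, not the original file)."""

    def __init__(self, project_id=None, client=None):
        # GCP_PROJECT_ID is an assumed environment variable name.
        self.project_id = project_id or os.environ.get("GCP_PROJECT_ID", "")
        if client is None:
            # Imported lazily so the class can be exercised with a stub client.
            from google.cloud import secretmanager

            client = secretmanager.SecretManagerServiceClient()
        self.client = client

    def get_secret(self, name, version="latest"):
        """Return the secret payload decoded as a UTF-8 string."""
        path = f"projects/{self.project_id}/secrets/{name}/versions/{version}"
        response = self.client.access_secret_version(request={"name": path})
        return response.payload.data.decode("utf-8")
```

Passing a client in explicitly is only there to make local testing easier; on the cloud function itself, the default client picks up the runtime service account, which needs permission to access the secret.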

Note that on the cloud function, the entry point has to be the name of the function in main.py that should run when the function is called. That function has to accept a request parameter so that it can be invoked through the cloud function’s HTTPS trigger.

Also, the function has to finish with a 2XX response; whatever it returns at the end can’t carry any other status code. I once saw a cloud function used for pipeline QA that was programmed to return a non-2XX code whenever the pipelines’ QA checks didn’t pass. Because of this, the cloud function kept being reported as failing until that logic was removed.

Cloud Scheduler Setup

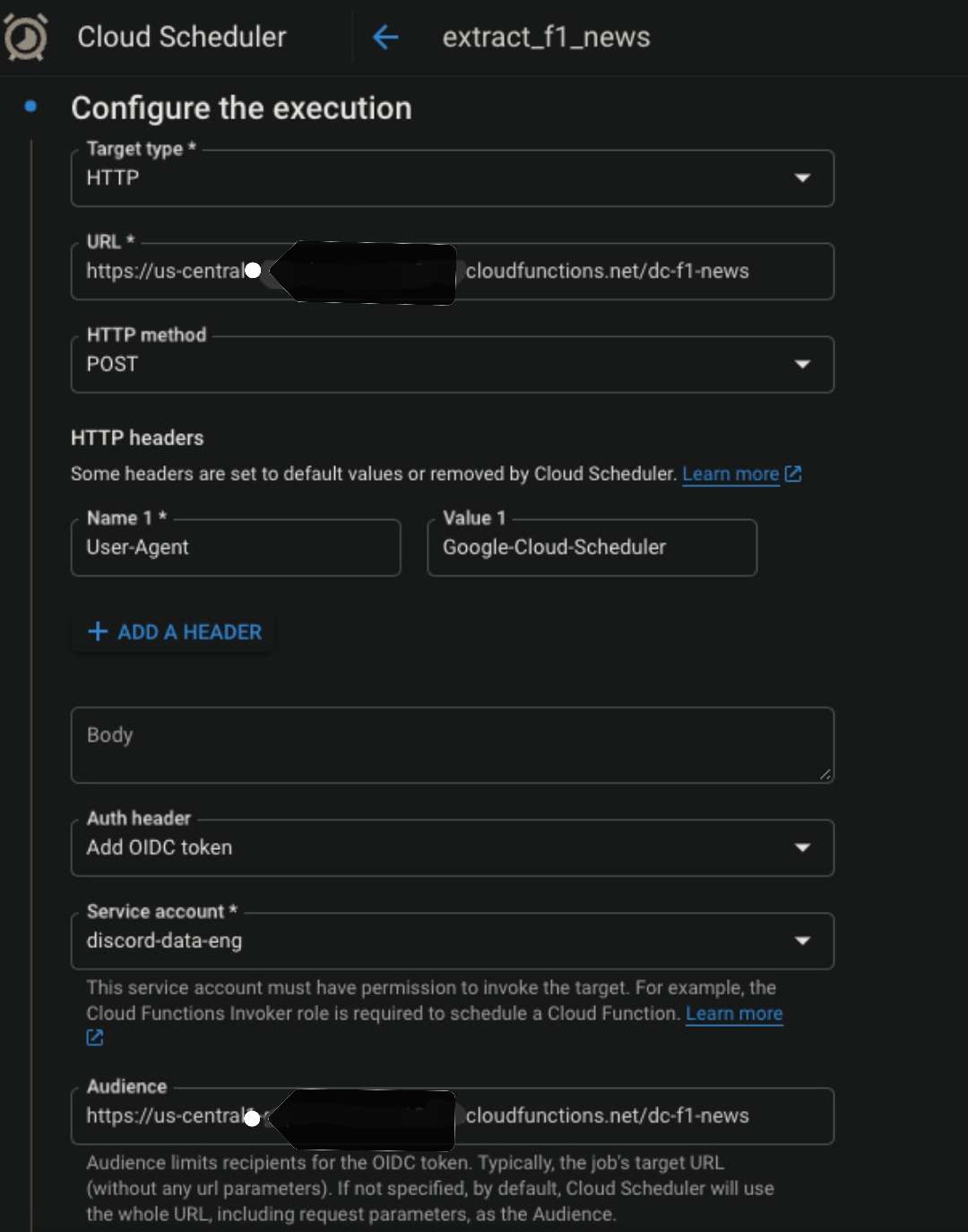

After deploying the cloud function successfully, the next step is to schedule it using GCP’s Scheduler. From our cloud function, we can obtain a Trigger URL under the Trigger Tab, which we can use for setting up our scheduler’s trigger.

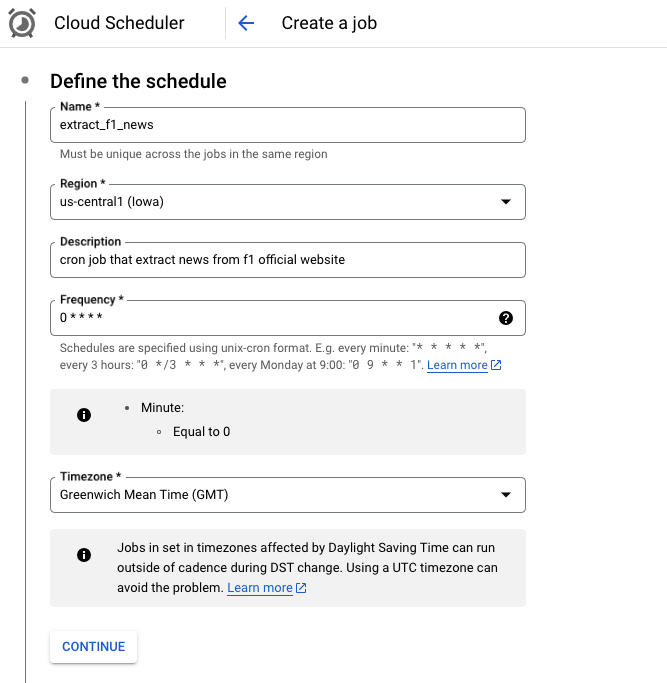

Let’s take a look at the scheduler’s setup.

Here we have to set the frequency of the job in cron syntax. I will have the job run hourly, which can be written as 0 * * * *.

The URL is the Trigger URL that we obtained from the function.

Please note that we need a service account here with sufficient privileges to trigger the function.

And that’s it! Every hour, the Cloud Function will be triggered. If there is news that hasn’t been published yet, it will be published to the Discord channel!
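The publishing logic itself is not shown in this post, so the following is only a sketch of the "publish what hasn’t been published yet" step. It assumes the messages go through a Discord webhook and that each news item is a dict with `id`, `title` and `url` keys; the function names and the User-Agent value are hypothetical as well.

```python
import json
import urllib.request


def post_to_discord(webhook_url, content):
    """POST a message to a Discord webhook, which expects a JSON 'content' field."""
    body = json.dumps({"content": content}).encode("utf-8")
    req = urllib.request.Request(
        webhook_url,
        data=body,
        headers={
            "Content-Type": "application/json",
            # Assumption: an explicit User-Agent, since some endpoints
            # reject urllib's default one.
            "User-Agent": "news-bot/0.1",
        },
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.status


def publish_new_items(items, already_published, send):
    """Send every item whose id is not in already_published; return the new ids."""
    new_ids = []
    for item in items:
        if item["id"] in already_published:
            continue
        send(f"{item['title']} {item['url']}")
        new_ids.append(item["id"])
    return new_ids
```

Inside the entry point, `send` could be something like `lambda text: post_to_discord(WEBHOOK_URL, text)`, with the webhook URL read from Secret Manager. How the set of already published ids is stored between runs is left open here.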

Git Integration

One thing that I realised when I first used Cloud Functions is that there’s no built-in version control. I saw some of my team members just write their code locally and upload it to GCP.

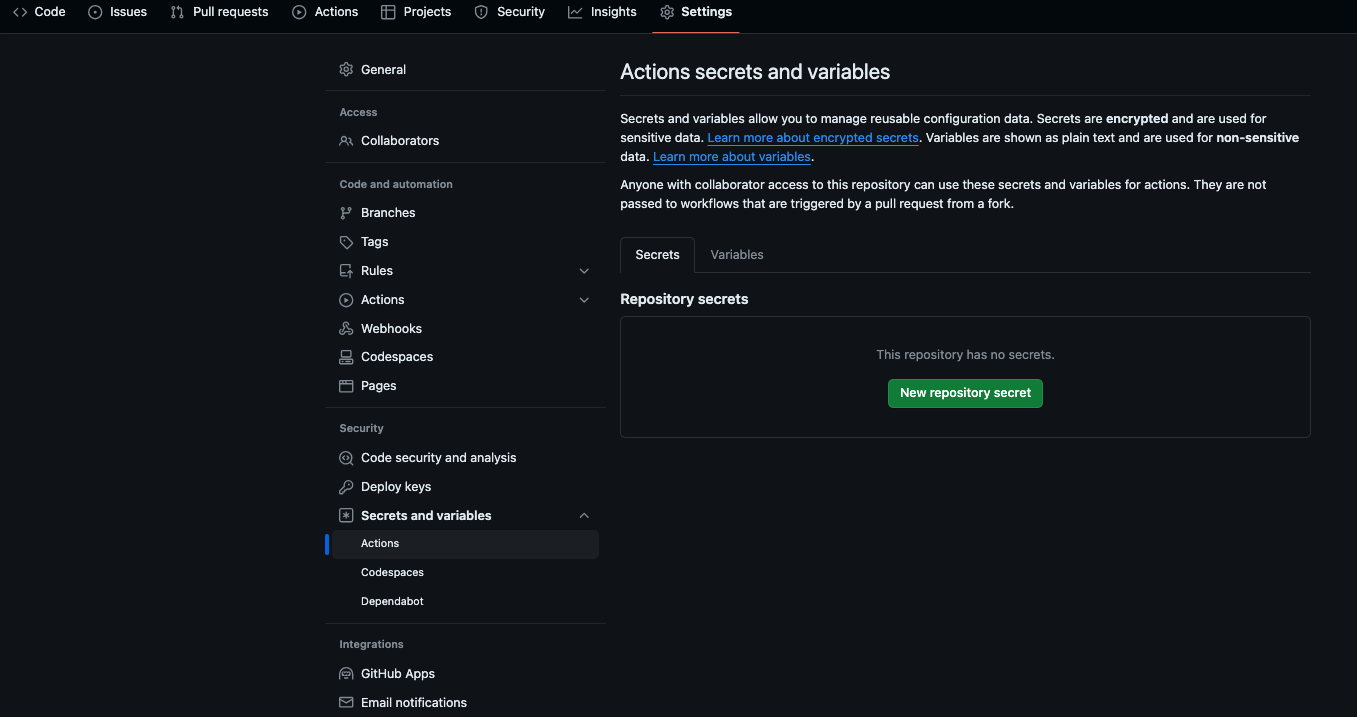

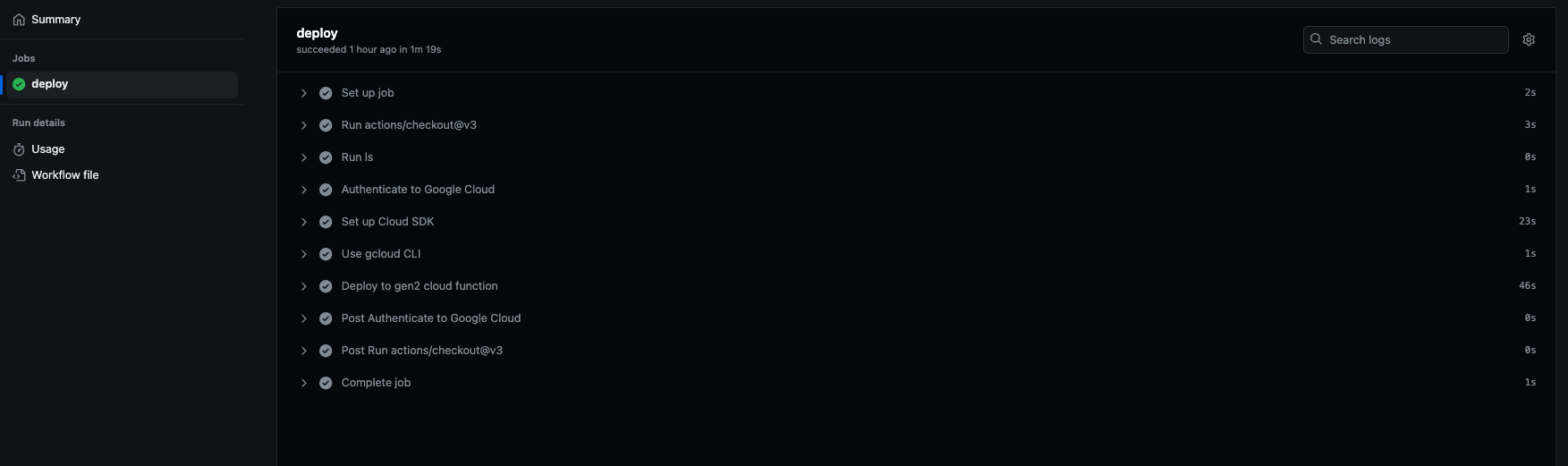

To better handle this, we can use GitHub Actions so that when code is pushed or merged to the main branch, the Action is triggered and updates the cloud function.

To do so, we first need the JSON credentials of the service account, which we store as a repo secret on GitHub. This time we store it as GCP_SA_DEPLOY_KEY and reference it later.

Then we can add a workflow file at .github/workflows/deploy-gcf.yml.

Note that I have added the flag --service-account to indicate which service account the function should use. When it is not specified, a default service account is used: the App Engine default service account for 1st gen functions, and the Compute Engine default service account for 2nd gen functions. Just make sure the service accounts involved in the deployment have all the required privileges granted.

The deploy-gcf.yml can look something like this:

    name: Deploy Cloud Functions

    on:
      pull_request:
        types: [closed]
        branches:
          - main

    jobs:
      deploy:
        # Deploy only when the pull request was merged, not just closed.
        if: github.event.pull_request.merged == true
        runs-on: ubuntu-latest
        steps:
          - name: Checkout code
            uses: actions/checkout@v3

          - name: Authenticate to Google Cloud
            uses: google-github-actions/auth@v1
            with:
              credentials_json: '${{ secrets.GCP_SA_DEPLOY_KEY }}'

          - name: Set up Cloud SDK
            uses: google-github-actions/setup-gcloud@v1
            with:
              version: '>= 363.0.0'

          - name: Use gcloud CLI
            run: gcloud info

          - name: Deploy to gen2 cloud function
            run: |
              gcloud functions deploy <cloud-function-name> \
                --gen2 \
                --region=us-central1 \
                --runtime=python310 \
                --source=<source-folder-in-repo> \
                --entry-point=<function-in-main-file> \
                --trigger-http \
                --service-account=svc@gcp-prj-123.iam.gserviceaccount.com

After the Action finishes successfully, the function on GCP is updated as well.

Some final thoughts…

- Scheduler is just one of the ways to trigger a Cloud Function. Apart from that, we can also use event-based triggers such as Pub/Sub or Cloud Storage. For example, when a new file lands in a GCP bucket, a Cloud Function can be triggered to run.

- When connecting Cloud Functions with BigQuery, I’ve learned that the region of the bucket has to be the same as the region of the dataset in BQ. If not, the data can’t be loaded successfully.
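For the bucket example in the first point, a handler could look roughly like the sketch below. It assumes a 2nd gen function with a Cloud Storage trigger, where the event payload carries the `bucket` and `name` of the new object; the function name `on_file_uploaded` is hypothetical.

```python
from types import SimpleNamespace


def on_file_uploaded(cloud_event):
    """Build the gs:// URI of the object that triggered the event."""
    # When deployed, this handler would typically be registered with the
    # functions_framework.cloud_event decorator; it is left out here so the
    # sketch runs without extra dependencies.
    data = cloud_event.data
    uri = f"gs://{data['bucket']}/{data['name']}"
    print(f"New file landed: {uri}")
    return uri


# Local usage example with a stand-in event object:
fake_event = SimpleNamespace(data={"bucket": "my-bucket", "name": "raw/news.csv"})
on_file_uploaded(fake_event)
```

From here, the handler could hand the URI to a BigQuery load job, which is where the region constraint from the second point comes into play.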

That’s it for now.

Thank you for reading and have a nice day!